Free template for AI Guidelines for your IT Security and Cybersecurity compliant with ISO 27001, SOC 2, GDPR, NIS and EU AI Act (AI Regulation).

Developing an organization’s IT Security Policies, complete with legal review, can be a lengthy endeavor where a single policy can require several days of effort.

Streamline your process, save valuable time and money by using our free AI Guidelines template.

The use of artificial intelligence (AI) and machine learning in daily work is increasing dramatically, in everything from IT support built into applications to organizations’ own AI projects.

AI can help organizations and employees by providing greater data insights, better threat protection, more efficient automation, and improved technology interaction. However, if misused, AI can be a detriment to individuals, organizations and society at large.

It is therefore important to set rules for how AI is used and handled in the organization. In most cases, this means adding a new AI Policy to the policy collection. In many organizations, however, especially smaller ones without their own AI development, guidelines for AI use are enough.

What do the AI Guidelines need to contain?

Basic guidelines and principles for AI tools in the organization

- Organizational, company and customer data must be protected at all times in all contexts where AI tools are used. ChatGPT and other similar AI tools are not safe places to use sensitive data, as more and more organizations are finding out the hard way. Be extremely careful about what is entered, especially when it comes to financial data, employee surveys and other similar sensitive material.

- AI tools must be approved by the IT department before they can be used in daily work. New tools appear at a rapid pace; not all of them are reliable, and not all of them will still be available in six months. AI tools must therefore always be tested and evaluated before they are used at work. If in doubt, don’t use the tool; look for a better alternative instead.

- Use of AI in public administration. The use of AI presupposes that the public administration is responsive to identify AI’s consequences for citizens, citizens’ rights, society and the environment. The public administration must also guarantee that AI technology promotes the general goals of the administration, such as an open, equal administration that supports active citizenship, the efficiency and effectiveness of the administration, and a society based on trust and respect for fundamental freedoms and rights.

- The organization’s AI use must be evaluated, developed and updated continuously. We constantly evaluate AI risks, the suitability of AI applications and the decisions their algorithms make. We develop our AI solutions and our own business based on these evaluations. We correct distortions quickly and share our observations openly within the organization. We assume that our partners also act responsibly and share this view.
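
The first two principles above (protect sensitive data, use only approved tools) can be partly supported by a small check that runs before text is sent to an AI tool. The following is a minimal sketch, not a complete safeguard: the tool names in `APPROVED_TOOLS`, the two patterns and the function names are all hypothetical, and real sensitive data takes many more forms than a regular expression can catch.

```python
import re

# Hypothetical allowlist maintained by the IT department (names are illustrative).
APPROVED_TOOLS = {"internal-assistant", "approved-translator"}

# Illustrative patterns only; they catch obvious e-mail addresses and
# IBAN-like account numbers, nothing more.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "IBAN": re.compile(r"\b[A-Z]{2}\d{2}[A-Z0-9]{11,30}\b"),
}


def redact(text: str) -> str:
    """Replace every match of a known pattern with a labeled placeholder."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label} REDACTED]", text)
    return text


def prepare_prompt(tool: str, text: str) -> str:
    """Refuse unapproved tools, then redact the text for approved ones."""
    if tool not in APPROVED_TOOLS:
        raise PermissionError(f"AI tool {tool!r} is not approved by IT")
    return redact(text)
```

A check like this reduces accidental leaks but does not replace employee judgment; material such as financial data or survey results still needs to be kept out of prompts by the person writing them.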

Guidelines for the daily use of AI tools in the organization

- You as a person are responsible for the decisions. A human is always responsible for the result when we use AI in our organization. When you create content and develop services, you are always responsible for the choices that AI makes. The responsibility issues regarding AI are no different from those regarding other processes in the organization. The same applies to the requirements for reliability.

- Always have your and the organization’s goals and target groups in focus. An important subgoal of all content should be to build trust in your and your organization’s operations. AI can help you get the job done faster but is just an assistive technology that does the grunt work for you. If it is abused or misused, it will do more harm than good to you and your organization.

- Everything must be edited by you before you publish it. The content produced needs to be vetted and reviewed by real human eyes to make it sound like you and feel like you. You have to stand behind everything you say, but it’s hard to do that if it’s been composed by an AI.

- Always fact-check every claim you make. AI tools sometimes “hallucinate”, which means they can not only make up facts, but also make up the sources of those made-up facts. Researchers aren’t sure why, but for example ChatGPT can cite research that never happened, reference books that don’t exist, and refer to court cases that never happened. It is up to you and your team to verify everything with proper fact-checking and critical thinking.

- Be aware of potential biases in your AI-generated content. Feel free to share opinions and insights from your colleagues and consult with your manager if you are unsure. It is important in all contexts to only publish content that you and your organization can stand behind.

- Run a plagiarism check to make sure your AI tool isn’t stealing someone else’s work. Some AI tools have plagiarism checks built in, but many do no checking at all. If in doubt, use an independent tool where you can, for example, paste in text and get a percentage showing how much of it matches existing work.

- Always notify your manager if you discover recurring and/or serious inaccuracies in, or misuse of, an AI tool. The technology behind AI tools is still young and must be viewed with a critical eye. Serious errors and misuse can create major problems for the organization, so always report any problems.
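
To illustrate what the percentage from a plagiarism check can mean, here is a minimal sketch that compares a draft against a single known reference text using overlapping three-word sequences. The function names are hypothetical, and commercial plagiarism tools compare against large databases with far more robust matching; this only shows the basic idea.

```python
def shingles(text: str, n: int = 3) -> set:
    """Return the set of n-word sequences in the text, lowercased."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def overlap_percent(candidate: str, reference: str, n: int = 3) -> float:
    """Percentage of the candidate's n-word sequences also found in the reference."""
    cand = shingles(candidate, n)
    if not cand:
        # Text shorter than n words has nothing to compare.
        return 0.0
    ref = shingles(reference, n)
    return 100.0 * len(cand & ref) / len(cand)
```

A high percentage is a signal to review and rewrite the passage, while a low percentage does not prove the text is original, since paraphrased content will not match word for word.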

Use an AI Policy if guidelines are not enough

Use our AI Policy template if guidelines are not enough in your organization.

Tip! Please read our extensive FAQ on IT Security policies.

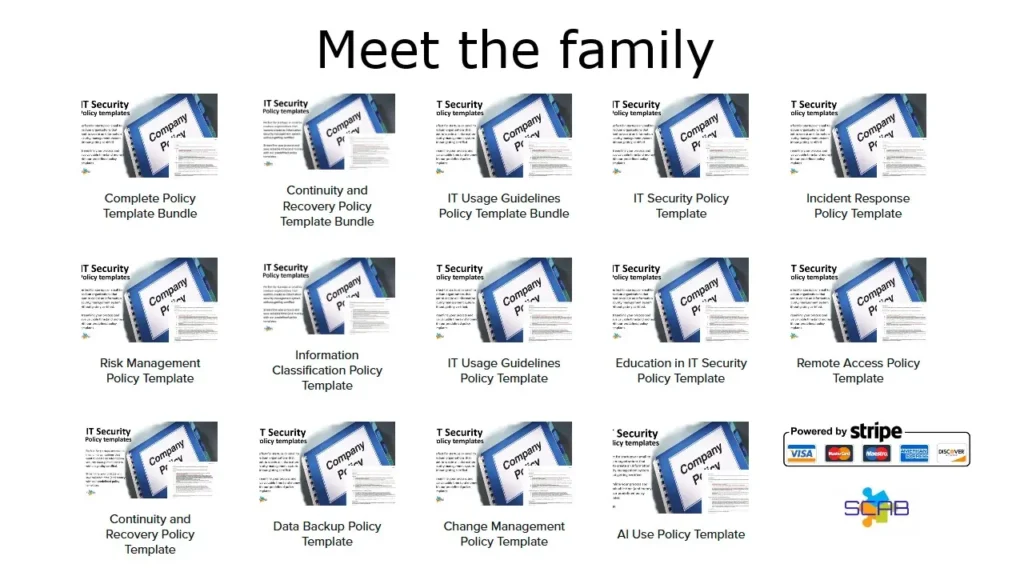

- IT Security Policy Templates

- Template – AI Guidelines (Free)

- Template – AI Policy

- Template – Backup and Restore Policy

- Template – Business Continuity and Recovery Policy

- Template – Change Management Policy

- Template – Incident Management Policy

- Template – Information Classification Policy

- Template – IT Security Policy

- Template – Policy for Risk Management

- Template – Policy for Use of IT Resources

- Template – Remote Access Policy

- Template – IT Security Training Policy

FAQ

We have compiled answers to the most common questions we get about AI Guidelines. Have a cup of coffee or tea and read in peace and quiet, and feel free to contact us if you have any questions of your own.

What is the difference between policy and guideline?

In the complex world of IT security, it is critical to understand the difference between policies and guidelines, as they both serve distinct but complementary roles within an organization’s security framework.

Policy:

A policy is a formalized document that establishes the overall rules and principles for a specific area within the organization. In the context of IT security, the policy acts as the highest authority and is often of a strategic nature. It describes what is to be achieved and why it is important, providing a solid foundation that guides other documents and security practices. An IT security policy is binding and has the following characteristics:

- Clarity and authority: Policies are clear in their determinations and have an authoritative tone that reflects the organization’s intentions and mandate.

- Governing documents: They act as umbrella documents that guide overall decisions and actions and must be adhered to by the entire organization.

- Long-term focus: Policy documents guide long-term goals and change only rarely, which gives stability to the organization’s security efforts.

Guidelines:

Guidelines, on the other hand, are practical and detailed instructions or processes that indicate how the objectives of the policy can be achieved. These are more flexible and detailed than the policy itself and may need to be updated more frequently to adapt to technological advances or changes in the threat landscape. Some characteristics are:

- Flexibility and detail: They offer specific regulations and step-by-step instructions that are somewhat more flexible than policies.

- Operational tools: Guidelines underpin policies by facilitating day-to-day operations and supporting specific security measures.

- Shorter adaptation cycle: Guidelines have a faster rate of change to ensure they are always relevant and effective as technology or conditions change.

By integrating carefully crafted policies and guidelines, organizations can create a well-equipped line of defense against IT security threats. The dynamic balance between policy and guideline allows one to react nimbly and effectively to new challenges while maintaining a structured, strategic approach to security. It’s not just about protecting the present, but about building a future where innovation can flourish in a safe environment. This understanding is fundamental to inspiring and guiding your organization towards a sustainable and reliable IT security culture.

How to implement AI Guidelines?

Successfully implementing IT security guidelines in an organization is an extensive and time-consuming project that requires a strategic and proactive methodology. Some important steps to ensure a smooth and efficient implementation:

- Assess current state: Before starting implementation, conduct a thorough analysis of current security measures and processes to identify gaps and areas for improvement. This evaluation provides valuable insight and serves as a baseline for the guidelines to be developed.

- Management involvement: Engage top management to secure their support for and approval of the security guidelines. The active participation of the leadership encourages the whole organization to take the guidelines and their implementation seriously.

- Communication and awareness: Develop a communication plan to introduce guidelines to employees. Inform and train staff on the importance and content of IT security policies, as well as their responsibilities in their application.

- Technical and organizational integration: Coordinate with tech teams and other relevant departments to integrate guidelines with existing systems and work processes. Ensure necessary technology and tools are in place to support policy requirements.

- Training and support: Implement training programs to equip employees with the skills and knowledge to work in accordance with new guidelines. Ongoing support should be offered to help staff adapt and keep up to date with any changes.

- Monitoring and Compliance: Set up mechanisms to regularly review and monitor compliance with IT security guidelines. Analyze feedback and evaluation data to ensure guidelines are followed and organizational security goals are met.

- Development and improvement: IT security is a dynamic field that requires continuous updating of guidelines to keep pace with technological changes and emerging threats. Establish a routine for regular policy review and improvement to ensure long-term effectiveness and relevance.

By conveying clear guidelines and offering support throughout the organization’s structure, you create a robust IT security culture. This structure not only leads to a safer workplace, but also shows the way towards continuous improvement and innovation in line with technological developments. By investing in the implementation of effective and well-equipped IT security guidelines, you equip your organization for the future with confidence and strength.

How to create compliance with AI Guidelines?

Ensuring compliance with IT security guidelines is critical to protecting the organization’s digital assets and maintaining integrity, trust and security. Some insightful strategies to achieve sustainable compliance:

- Leadership and culture: Compliance starts at the top. Create a culture where IT security is part of the company’s DNA, with leaders leading by example. When security is prioritized by senior management, it will permeate the entire organization’s procedures and behaviors.

- Continuous training: Regular training initiatives and workshops are fundamental to keep all staff up to date on the meaning of guidelines and key principles. Interactive and scenario-based training programs can make the learning process more relevant and engaging.

- Monitoring infrastructure: Implement advanced monitoring systems and tools to continuously monitor compliance in real time. By using analysis and automation, deviations can be detected quickly, which makes it possible to react proactively and minimize risks.

- Skills building and support network: Build internal support teams and expert groups that can provide advice, solve problems and drive improvement initiatives. A dedicated security team can also act as a point of contact for questions and problems that may arise among employees.

- Incentives and rewards: Establish incentives to encourage employees to follow the organization’s IT security guidelines. Draw attention to and reward good security behaviors, which can inspire the whole team and reinforce the desired actions.

- Regular audits and updates: Conduct regular audits to ensure that all IT security guidelines are still relevant and effective. Continuous improvement cycles are important for maintaining a high security standard in a rapidly changing environment.

- Transparent communication: Encourage open and transparent communication around IT security and cybersecurity issues and challenges. Employees should feel confident in reporting potential security risks or breaches early and know that their reports will be taken seriously and that they will be protected.

- Integrate compliance into KPIs: Make security and compliance part of the key performance indicators (KPIs) for departments and individuals. By making security part of management, it becomes not just a mandatory task but an integrated part of the business goals.
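
As one way to make compliance measurable as a KPI, the sketch below computes a compliance rate per department and lists the departments that fall below a target. The record format, the function names and the 90% default target are assumptions made for illustration; in practice the input would come from whatever training or audit system the organization uses.

```python
from collections import defaultdict


def compliance_by_department(records):
    """Compute the share of compliant records per department.

    `records` is an iterable of (department, is_compliant) pairs,
    for example one pair per employee and completed review.
    """
    totals = defaultdict(lambda: [0, 0])  # department -> [compliant, total]
    for department, is_compliant in records:
        totals[department][1] += 1
        if is_compliant:
            totals[department][0] += 1
    return {dept: ok / total for dept, (ok, total) in totals.items()}


def below_target(rates, target=0.9):
    """Return the departments whose rate is below the target, sorted by name."""
    return sorted(dept for dept, rate in rates.items() if rate < target)
```

For example, passing the result of `compliance_by_department` to `below_target` gives a short list of departments to follow up with, which fits the regular audits described above.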

Compliance is not a one-off exercise, but rather a continuous effort and an ever-present dimension of organizational culture. By adopting a holistic approach to implementing and achieving compliance, organizations can stand strong against future threats. This approach strengthens the organization’s ability not only to protect itself but also to drive innovation in a safe and secure digital environment where future opportunities can be realized with trust and security at the forefront.

What types of artificial intelligence (AI) are there?

- Artificial Narrow Intelligence (Narrow AI). Artificial narrow intelligence, sometimes called “weak AI”, refers to the ability of a computer system to perform a narrowly defined task better than a human. Narrow AI is the highest level of AI development that humanity has reached so far, and all examples of AI that you see in real life fall into this category, including autonomous vehicles and personal digital assistants. This is because even when it appears that AI is thinking for itself in real time, it is actually coordinating several narrow processes and making decisions within a predefined framework. AI “thoughts” do not include consciousness or emotions.

- Artificial general intelligence (general AI). Artificial general intelligence, sometimes called “strong AI” or “human-level AI”, refers to the ability of a computer system to match or outperform humans in any intellectual task. It’s the kind of AI you see in movies where robots have conscious thoughts and act on their own motives. In theory, a computer system that has achieved general AI would be able to solve deeply complex problems, provide judgment in uncertain situations, and incorporate prior knowledge into its current reasoning. It would have human-level creativity and imagination and be able to perform many more tasks than narrow AI.

- Artificial Super Intelligence (ASI). A computer system that has achieved artificial superintelligence could surpass humans in almost every field, including scientific creativity, general wisdom, and social competence.

What is the difference between AI and generative AI?

Traditional AI refers to AI systems built to perform specific tasks, such as classifying data, detecting patterns or making predictions, either by following predetermined rules or by applying models trained on data. These systems analyze existing data rather than create new content. Generative AI, on the other hand, learns the patterns in its training data and uses them to generate new content, such as text, images or code.